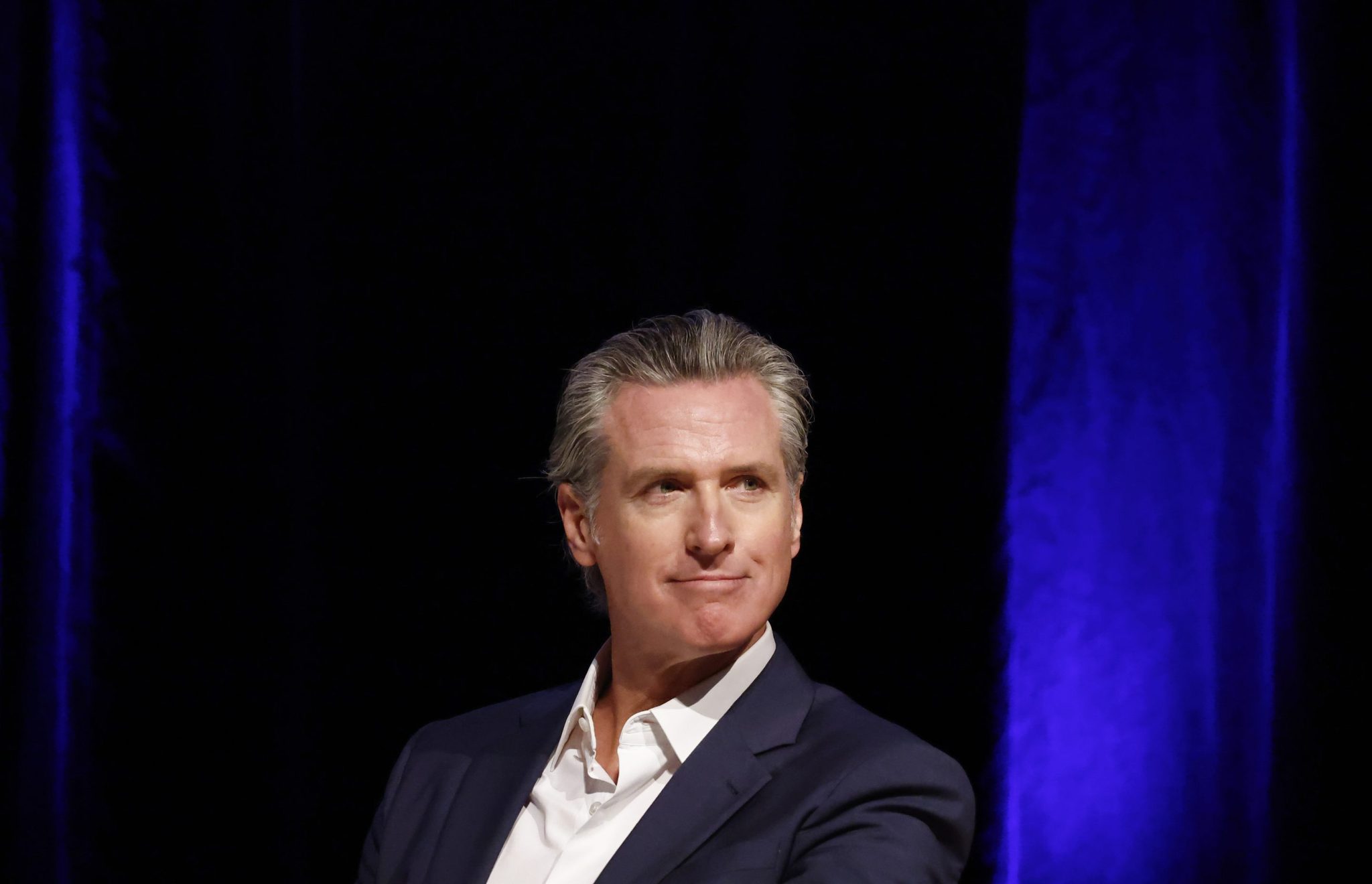

California Governor Gavin Newsom Signs Landmark AI Law SB 53

California has taken a significant step toward regulating artificial intelligence: Governor Gavin Newsom has signed a new state law that will require major AI companies, many of which are headquartered in the state, to publicly disclose how they plan to address the potentially catastrophic risks posed by advanced AI models.

The law also creates mechanisms for reporting critical safety incidents, extends whistleblower protections to AI company employees and initiates the development of CalCompute, a government consortium tasked with creating a public computing cluster for safe, ethical and sustainable AI research and innovation. By compelling companies, including OpenAI, Meta, Google DeepMind and Anthropic, to follow these new rules at home, California may effectively set the standard for AI oversight.

Newsom framed the law as a balance between protecting the public and encouraging innovation. In a press release, he wrote: “California has proven that we can establish regulations to protect our communities while ensuring that the growing AI industry continues to prosper. This legislation strikes that balance.”

The legislation, authored by State Senator Scott Wiener, follows a failed attempt to pass a similar AI law last year. Wiener said that the new law, known by the shorthand SB 53 (for Senate Bill 53), focuses on transparency rather than liability, a departure from his previous bill, SB 1047, which Newsom vetoed last year.

“The passage of SB 53 marks a notable victory for California and the AI industry as a whole,” said Sunny Gandhi, Vice President of Political Affairs at Encode AI, a co-sponsor of SB 53. He said that establishing transparency and accountability measures for large-scale developers and the most powerful models “opens the way to a competitive, safe and globally respected AI ecosystem.”

Industry reactions to the new legislation have been divided. Jack Clark, co-founder of the AI company Anthropic, which supported SB 53, wrote on X: “We applaud (the governor of California) for signing (Scott Wiener's) SB 53, establishing transparency requirements for frontier AI companies that will help us all have better data about these systems and the companies that build them. Anthropic is proud to have supported this bill.” He pointed out that, although federal standards remain important to prevent a patchwork of state rules, California has created a framework that balances public safety with continued innovation.

OpenAI, which did not endorse the bill, told the media that it was “happy to see that California had created a critical path to harmonization with the federal government, the most effective approach to AI safety,” adding that, if properly implemented, the law would allow cooperation between the federal government and state governments on the deployment of AI. Meta spokesperson Christopher Sgro also told the media that the company “supports balanced AI regulation,” calling SB 53 “a positive step in this direction,” and said that Meta is looking forward to working with legislators to protect consumers while promoting innovation.

Although it is a state-level law, the California legislation will have a global reach, because 32 of the world's top 50 AI companies are based in the state. The bill requires AI companies to report incidents to California's Office of Emergency Services and protects whistleblowers, allowing engineers and other employees to raise safety concerns without risking their careers. SB 53 also includes civil penalties for non-compliance, enforceable by the state attorney general, although AI policy experts such as Miles Brundage noted that these penalties are relatively weak, even compared with those imposed by the EU's AI Act.

Brundage, who was previously head of policy research at OpenAI, said in a post on X that while SB 53 represented “a step forward,” there was a need for “real transparency” in reporting, stronger minimum risk thresholds and technically robust third-party evaluations.

Collin McCune, head of government affairs at Andreessen Horowitz, also warned that the law “risks squeezing out startups, slowing innovation and entrenching the biggest players,” and said that it sets a dangerous precedent for state-by-state regulation. Several AI companies that lobbied against the bill made similar arguments.

California aims to promote transparency and accountability in the AI sector by requiring public disclosures and incident reporting; however, critics like McCune argue that the law could make compliance difficult for smaller companies and entrench Big Tech's dominance of AI.

Thomas Woodside, co-founder of the Secure AI Project, a co-sponsor of the law, called the concerns about startups “overblown.”

“This bill only applies to companies that train AI models with a huge amount of compute that costs hundreds of millions of dollars, which a small startup cannot do,” he told Fortune. “It is about reporting very serious things that go wrong, and whistleblower protections, and a very basic level of transparency. Other obligations do not even apply to companies that have less than $500 million in annual revenue.”

https://fortune.com/img-assets/wp-content/uploads/2025/03/GettyImages-2202176003-e1741106904647.jpg?resize=1200,600